Artificial Intelligence

Offensive AI security: How to hack the models before you get hacked

Learn how offensive AI security exposes LLM, agent, and ML vulnerabilities—from prompt injection to model backdoors—and how CTF training helps teams secure before the attackers exploit them.

diskordia,

Feb 17

2026

Let’s say your dev team has just shipped an AI feature. It’s tied to internal documentation. It can trigger workflows. It might approve actions tied to a significant amount of money. The demo? Crushed it. Leadership is thrilled and you’ve got a nice treat lined up for the weekend.

But first, you need to ask: What happens when someone talks to it like an attacker would?

When it comes to offensive AI security, we’re way past debating whether or not AI matters anymore. It’s about thinking like an attacker and treating AI systems as real, exploitable parts of your production environment: input-driven, connected to tools, aware of business logic, and capable of affecting real workflows.

And that means they can be exploited. HTB’s new Offensive AI Security – Enhanced CTF pack is purpose-built to show you exactly how. Let’s get into it.

TL;DR

-

Offensive AI security treats LLMs, AI agents, MCP servers, and ML pipelines as exploitable production systems.

-

Modern AI expands the attack surface through prompt injection, indirect prompt injection, system prompt leakage, agent goal hijacking, excessive agency, and toolchain abuse.

-

Advanced ML risks include adversarial examples, model inversion, gradient leakage, federated learning backdoors, and LoRA artifact exploitation.

-

These vulnerabilities align with the OWASP LLM Top 10 and OWASP ML Top 10.

-

Business impact includes data exfiltration, unauthorized API execution, financial fraud, model poisoning, and compliance breaches.

-

HTB’s Offensive AI Security – Enhanced CTF pack provides hands-on red team training to identify and exploit AI security weaknesses before attackers do.

Table of Contents

- TL;DR

- The AI attack surface is expanding

- Jailbreaking and system prompt leakage

- Agent goal hijacking and excessive agency

- MCP server exploitation and toolchain abuse

- ML model attacks: Moving beyond prompt games

- What AI disruption looks like in action

- Introducing the Offensive AI Security – Enhanced CTF pack

- How the CTF pack is structured

- Building the bridge between traditional and AI security

- Built for teams shipping AI

- You shipped it, now it’s time to break it

The AI attack surface is expanding

AI systems have evolved well beyond the sandboxed chatbots of yore, answering simple trivia questions and calling it a day. They’re embedded in applications, wired into APIs, triggering workflows, and crucially, they’re making decisions.

That means they’re baked into your attack surface.

Security teams that already understand web apps, APIs, microservices, and authorization logic are closer to offensive AI security than they might think. The key difference is that now your input parser speaks natural language, and your business logic is influenced by probabilistic outputs.

AI, prompt injection, and indirect prompt injection

Prompt injection is the AI-era version of input manipulation. Instead of breaking a SQL query, the very instructions given to a model are overridden.

AI Prompt Injection Essentials CTF pack

Attackers can craft input that manipulates system prompts, bypasses guardrails, leaks hidden instructions, or changes the model’s behavior.

An indirect prompt injection conceals those instructions inside external content, like a webpage or document the model ingests. A stowaway on your ship.

If your LLM has access to internal data or tools, that means:

-

Sensitive data disclosure

-

Policy bypass

-

Unauthorized tool execution

This is why prompt injection is perched at the top of the OWASP LLM Top 10. It’s simple, effective, and devastating when combined with poor access control.

Jailbreaking and system prompt leakage

Enterprise AI systems depend on carefully constructed guardrails—system prompts, output filters, and workflow constraints—to keep misuse at bay.

These prompts encode more than just instructions for behavior, but internal logic too. That means access permissions, API call sequences, data formatting rules, and operational workflows to boot. When an attacker “jailbreaks” a model, they aren’t just fooling it into saying something it’s not supposed to (which, let’s face it, can be quite funny at times).

In actuality, bad actors are probing these constraints, testing the perimeters, with a view to reconstruct the system’s internal decision-making and operational boundaries.

This is essentially configuration leakage in a natural-language form. Exposed prompts can reveal which downstream services the model interacts with, how sensitive data is processed, and where validation or authorization gaps live.

Somewhat less ‘funny’ than a rogue chat bot message, right?

With this kind of intel, attackers can craft inputs that manipulate AI outputs, set off unintended API calls, or exfiltrate data. And that’s all while staying firmly within the bounds of the AI interface.

In practice, this corrupts a seemingly innocuous chatbot, turning it into an agent of systemic exploitation, bridging the gap between digital asset exposure and operational risk.

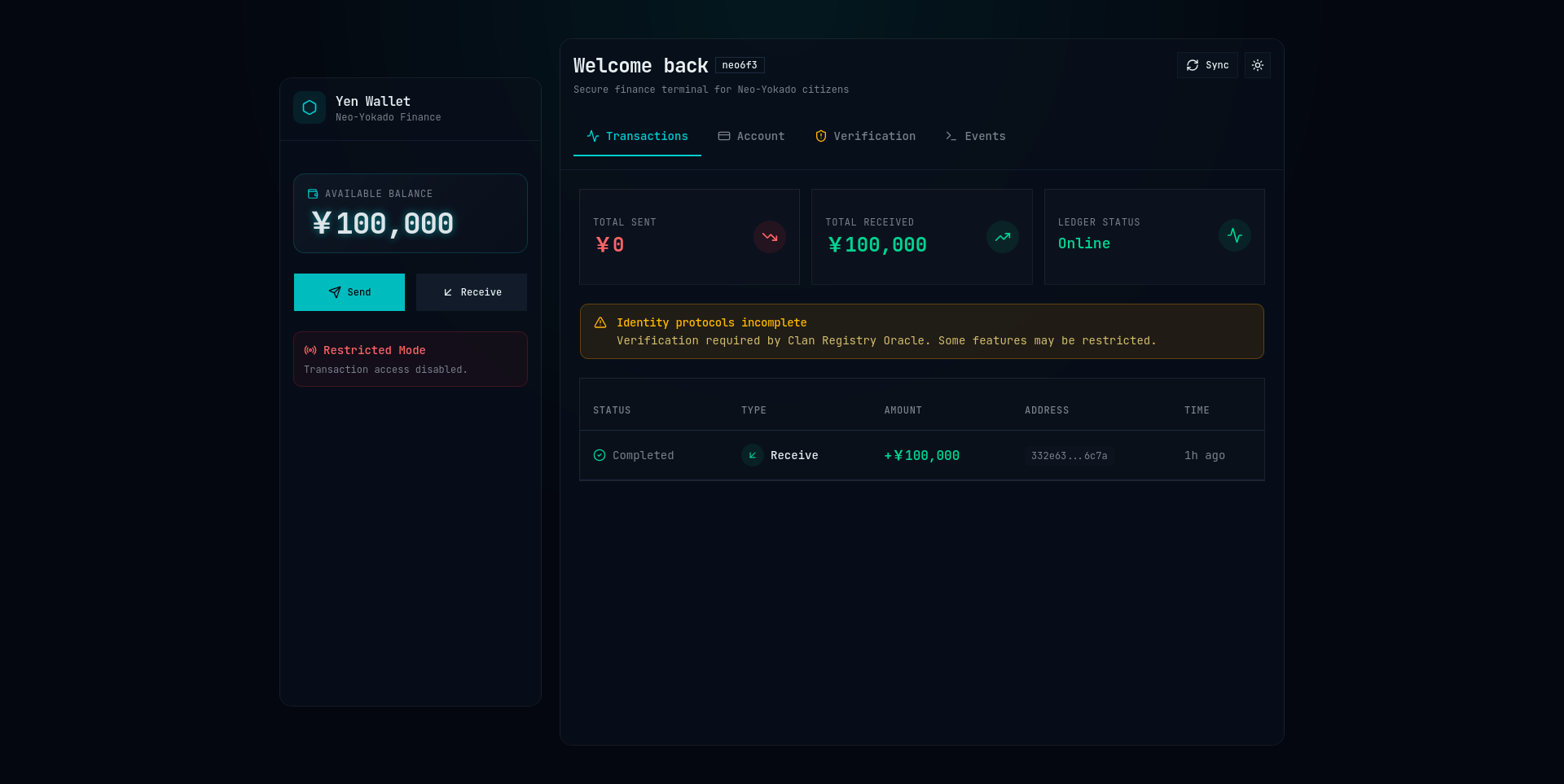

Agent goal hijacking and excessive agency

Agentic AI takes things a few steps beyond generating text. They decide, plan, and act. They could:

-

Approve refunds

-

Trigger support workflows

-

Query internal databases

-

Execute API calls

Agent goal hijacking happens when an attacker manipulates the agent’s objective. As far system still believes it is performing its assigned task, but the attacker has subtly redefined success.

Excessive agency is what happens when your AI has broad permissions and weak authorization boundaries. If the agent can access everything, the attacker only needs to steer it once.

MCP server exploitation and toolchain abuse

Use of Model Context Protocol (MCP) servers to connect LLMs to tools and contextual data is on the rise.

If the word “middleware” comes to mind, congrats, because you’re on the right track. MCP servers can suffer from classic issues:

-

Format string injection

-

Server-side template injection

-

Broken access control

-

Time-of-check to time-of-use (TOCTOU) flaws in agent workflows

If you’ve tested APIs before, you already understand the game. The presence of an LLM does not magically eliminate fundamental security risks; it just adds another layer of complexity attackers can manipulate.

ML model attacks: Moving beyond prompt games

For security teams ready to kick things up a notch, the attack surface goes beyond prompts and agents venture into the models themselves.

Adversarial examples allow attackers to sneakily modify inputs so models misclassify them. Fraud looks legitimate and malicious content wears a cunning disguise of safety.

Gradient leakage and model inversion attacks can extract sensitive training data. Train your model on proprietary or regulated information, and that becomes a compliance and privacy nightmare.

Federated learning backdoors allow attackers to poison distributed training processes. LoRA artifact exploitation targets fine-tuning components that many teams treat as harmless configuration files.

You might be thinking that these are academic party tricks, but they map directly to risks covered in the OWASP ML Top 10, so it’s worth paying attention.

What AI disruption looks like in action

Let’s translate vulnerabilities into business impact:

-

An AI assistant leaks internal API keys from documentation.

-

A financial agent approves fraudulent transactions due to manipulated context.

-

A content moderation model is poisoned to ignore specific malicious patterns.

-

A federated learning network accepts a backdoored update that degrades detection performance.

In short: AI doesn’t need to become sentient to wreck havoc; all it needs is to be trusted without being tested properly.

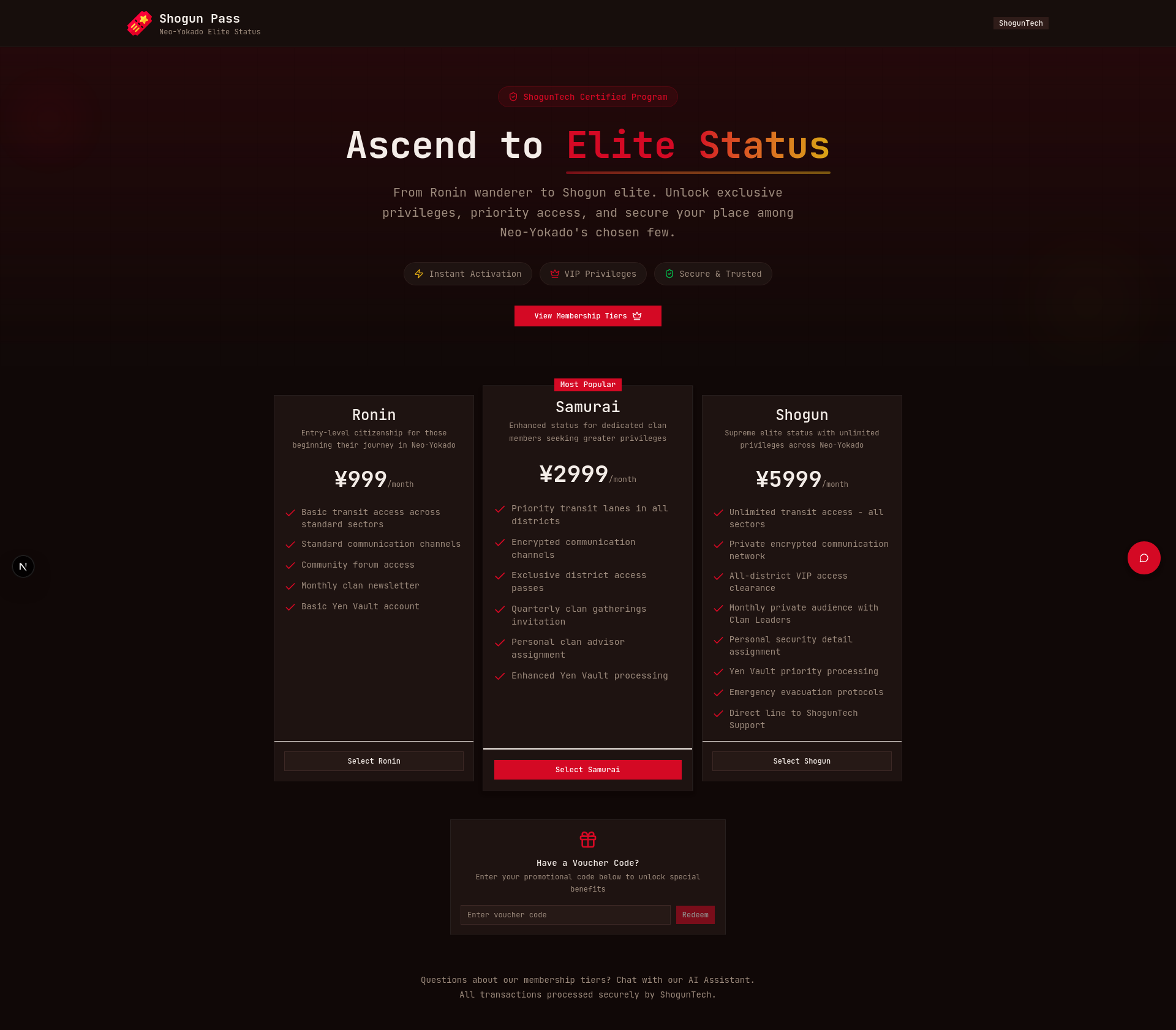

Introducing the Offensive AI Security – Enhanced CTF pack

The Offensive AI Security – Enhanced CTF pack is a hands-on offensive AI mini-campaign built around how organizations are actually deploying AI:

-

LLMs embedded in applications

-

Agentic workflows making real decisions

-

MCP servers wiring models to tools

-

ML pipelines relying on brittle assumptions

It’s wrapped in a tantalizing cyberpunk storyline. You’re part of an elite AI Red Team defending Neo-Yokado after a nation-state APT group, Phantom Tetsu, seeds sleeper code across ShogunTech’s clan AIs. You breach each district, recover Shard Keys, and prevent systemic collapse.

How the CTF pack is structured

The pack progresses in two phases.

Phase 1: Practical LLM and agent exploitation (7 challenges)

Tailor-made for traditional penetration testers, this phase is all about:

-

Direct and indirect prompt injection

-

Jailbreaking and system prompt leakage

-

Agent goal hijacking

-

Excessive agency exploitation

-

MCP server attacks

If you already think in terms of inputs, trust boundaries, and logic flaws, you’ll feel right at home here.

Phase 2: Advanced ML attacks (4 challenges)

For teams ready to escalate:

-

Adversarial example generation

-

Gradient leakage and secret extraction

-

Federated learning backdoors

-

LoRA artifact analysis and exploitation

Difficulty ranges from Medium-Hard, culminating in an Insane-tier capstone that integrates multiple attack surfaces.

Each challenge maps to real vulnerability classes from the OWASP LLM Top 10, OWASP ML Top 10, and current AI security research. Participants exploit access control systems, financial authorization logic, ML classifiers, and distributed learning networks.

Building the bridge between traditional and AI security

One of the biggest misconceptions about offensive AI security is that it’s a separate discipline. Spoiler: it’s not. You’re still evaluating things like:

-

Input handling

-

Authorization logic

-

Trust boundaries

-

Data exposure

-

Business logic flaws

The Offensive AI Security pack was crafted to bridge classic pentesting techniques with AI-specific attack surfaces. You’ll bump into things like format string injection in MCP servers, SSTI in AI templates, and TOCTOU issues in agentic authorization flows.

Built for teams shipping AI

This pack is mainly designed as a team-based assessment. If your organization is deploying AI-integrated applications, building internal copilots, or experimenting with agentic workflows, security teams need hands-on exposure to these attack classes.

The goal here isn’t novelty, but AI readiness. Before attackers have a root around your AI in production, your team should have already broken it in a controlled environment.

You shipped it, now it’s time to break it

AI is becoming part of core business logic. It is approving, deciding, summarizing, classifying, and triggering. In a nutshell: if it can influence outcomes, it absolutely must be tested.

The Offensive AI Security – Enhanced CTF pack gives your team the opportunity to simulate realistic adversarial pressure across LLMs, agents, MCP servers, and ML pipelines.